Curriculum Vitae

Timeline


2018-present

Tesla Autopilot

Senior Staff Machine Learning Scientist

Training production neural networks for Tesla's active safety and full self-driving products.


2012-2018

Stanford University

Ph.D. Neuroscience

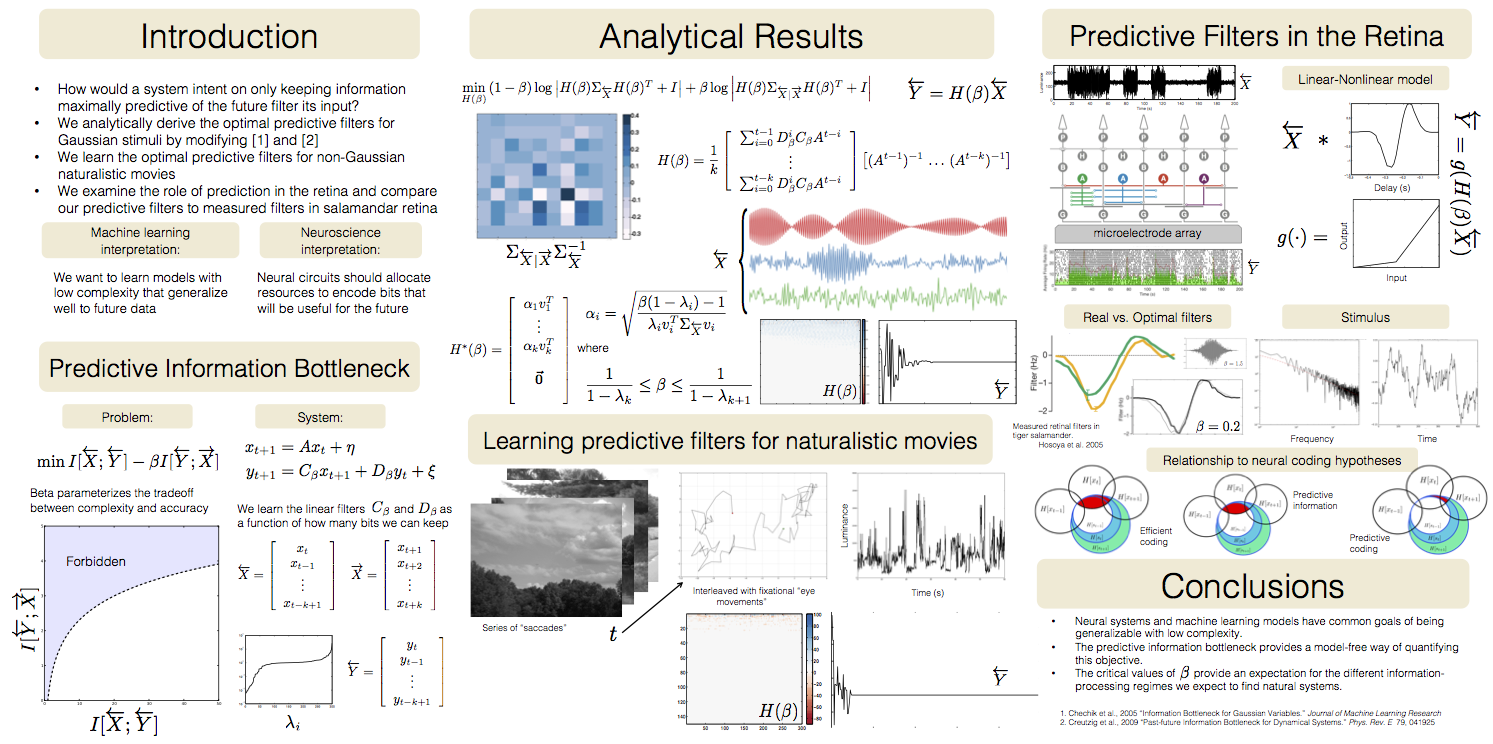

Ph.D. Minor, Computer Science

Advisors: Steve Baccus and Surya Ganguli

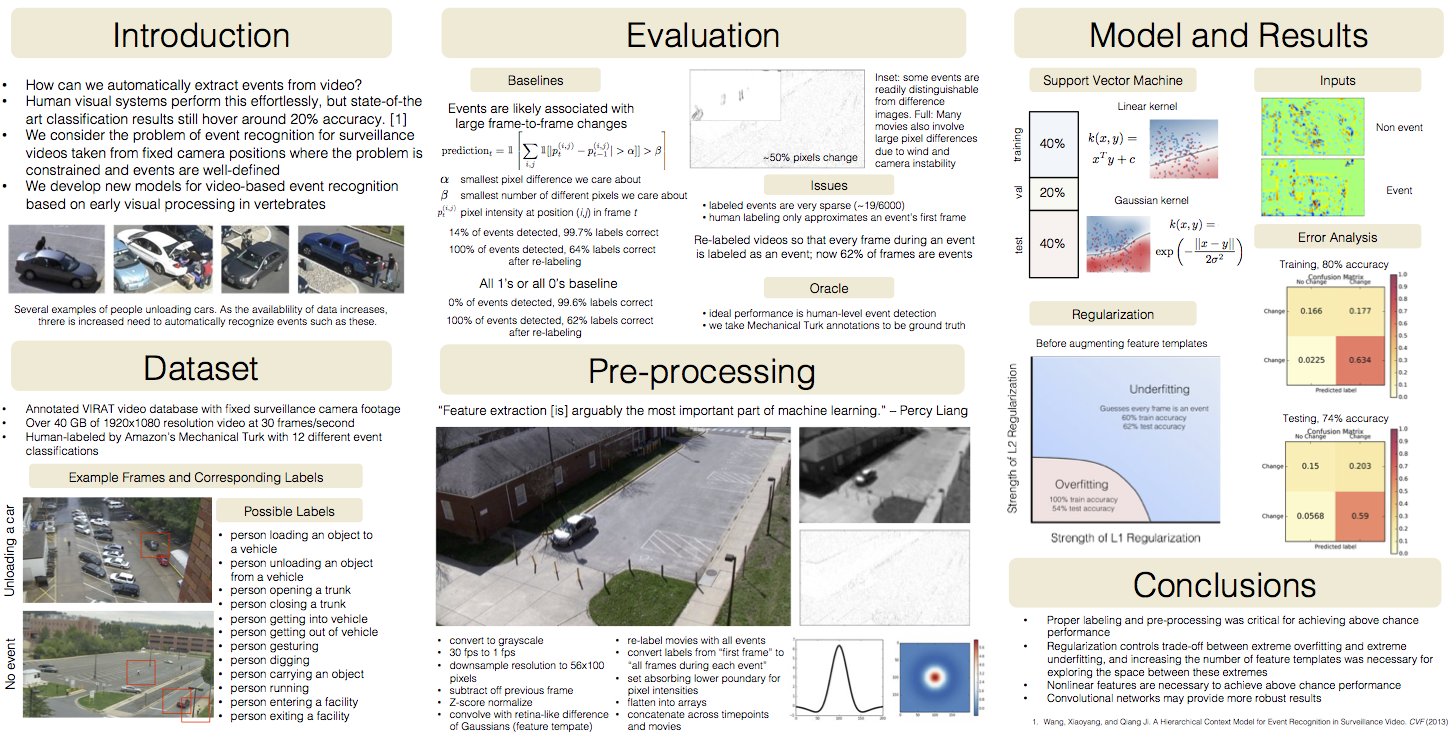

NVIDIA Best Poster Award, SCIEN 2015

Top 10% Poster Award, CS231n (Convolutional Neural Networks for Visual Recognition)

Ruth L. Kirschstein National Research Service Award

Mind, Brain, and Computation Traineeship

NSF IGERT Graduate Fellowship

2017

Google Brain

Software Engineer Intern

Artificial intelligence research in computer vision

Mentors: Jon Shlens and David Sussillo

Publication: Recurrent segmentation for variable computational budgets

2010-2012

University of Hawaii

M.A. Mathematics

Advisor: Susanne Still, Machine Learning Group

Departmental Merit Award

NSF SUPER-M Graduate Fellowship

Kotaro Kodama Scholarship

Graduate Teaching Fellowship

2006-2010

University of Chicago

B.A. Computational Neuroscience

Research: MacLean Computational Neuroscience Lab

Research: Department of Economics, Neuroeconomics Group

Research: Gallo Memory Lab

Lerman-Neubauer Junior Teaching Fellowship

NIH Neuroscience and Neuroengineering Fellowship

Innovative Funding Strategy Award

2009

Institute for Advanced Study

Undergraduate Research Fellow

Bioinformatics research at the Simons Center for Systems Biology in Princeton, NJ


Through 2006

Originally from San Diego

Valedictorian

Bank of America Mathematics Award

President's Gold Educational Excellence Award

California Scholarship Federation Gold Seal

Advanced Placement Scholar with Distinction